Hi there @nachenko

Hmm… someone is opening that door again, or should I say “the gate” to the mysterious streaming universe.

OK, let’s dive into this… LOL. But first things first, a little disclaimer:

- I’m in no way an expert on this subject. The long write-up below is based on my own reading, watching, testing & understanding of this complex & often controversial area of audio. It’s a long rant that won’t give you any magic formula to make those numbers show up the way you’d like each time you upload your music to Streaming Services. It’s simply my own point of view & assumptions, hopefully highlighting some points ( well, at least trying to ) that I think are more important than chasing numbers across a series of tests which in the end might not be that relevant… and of course, all of this is in my very own English, since you already know it’s not my native language.

So in a nutshell, take all this with a grain of salt, it’s simply my own approach & reflection on this very interesting subject.

That said, if you’re still willing to open the “Gate”, here it is…

About 2 years ago, this topic of Digital Distribution Loudness came up on the forums. At the time, I have to say that I was also quite fascinated & perhaps even obsessed by those numbers & figures, and I also spent some time trying to test & compare things. Without saying it was pointless to do so, I can tell you that I now focus less on those numbers, simply because they are all relative & not absolute.

That previous forum discussion probably led to the tutorial from Kirk Degiorgio, Understanding Loudness and Metering, which is a very comprehensive course covering the different metering units, the tools we can use to measure them and how to prepare mixes for digital distribution. It’s a very good place to start IMO, but it still doesn’t unveil what’s really going on under the hood with Streaming platforms’ audio processing. ( BTW if you haven’t done it yet, you can try the full 7-day trial to be able to watch the course. Just a side note here, but it would be nice if you could watch it ).

So what’s really going on with Streaming Services and the way they process uploaded music ?

The first thing is converting your music to a suitable streaming format using “Lossy” codecs, which unfortunately aren’t the same across different platforms, although the reasons for doing this are the same. Those platforms have millions of tracks in their catalog and they don’t want their users to reach for the level knob each time a new track comes on. They also have a responsibility & must follow some regulations about “safe” levels and ear & equipment protection. Those 2 reasons are self-explanatory and make sense, but then comes the really important bit : those platforms stream audio across the Internet, therefore they need to use appropriate audio file formats, not only to save storage space ( as we are familiar with for digital audio files on computers ) but also to keep bandwidth usage under control in order to deliver a reliable & consistent audio flow to their users. That’s probably where & why those numbers we try to measure & compare are more relative than absolute.

My understanding of this ( and I’m not an expert ) is that each Streaming Service decides on a loudness target that suits its needs for the above-mentioned reasons : even playback levels across tracks, storage space & ease of streaming with bandwidth consumption in mind. So basically, we or the distributors/aggregators upload Lossless audio files which are converted to Lossy audio formats using different codecs & algorithms. From there they have what we could call a “compliant master” version of your track.

Now, depending on the playback device, the network quality or simply the user’s choice of playback quality settings, this “compliant master” isn’t going to be streamed the same way each time. Instead, the service will take this reference “compliant master” and make a new file from it to achieve the best possible streaming quality : for example, a free Spotify listener will be able to play back your track at a maximum bit-rate of 160kbps while a paying premium user will be able to play it back at a maximum bit-rate of 320kbps. Those are only nominal maximums though, and again, the result will vary depending on the available bandwidth, the playback device’s capabilities & the chosen settings. Not only that, but a higher bit-rate doesn’t mean that you’ll get higher audio quality in the end. It all depends on the codecs being used in the first place, and that’s where we fall back to the differences between Streaming Services and the way they process uploaded Lossless audio files.

Add to this that some major distributors are able to prepare & deliver their own streaming-encoded audio files, meaning they take care of making their own AAC or MP3 “compliant master” files and provide those directly to the Streaming platform, bypassing its codecs & algorithm processing. It might sound unfair for independent artists, but it’s just the way things are.

Why this rant about those Lossy codecs, you may ask ? Well, because it’s the part that we really can’t avoid anymore. Digital distribution & Streaming Services have now taken over the whole music industry. It’s generating millions each day so it’s not going to go away. Instead, major Streaming Services are actually battling hard to develop new codecs or to provide High Res audio streaming instead of the current Lossy codecs, and that’s already happening with Qobuz, Amazon Music HD, Tidal HiFi & Deezer HiFi.

Here is some interesting reading about Streaming Services & codec quality, BTW.

That’s probably where the new “loudness war” is taking place in this day & age, and let’s face it, the only reason why we bother with those numbers & tests is to try to ensure that our music is going to be competitive in this new distribution environment. One thing is never going to change with audio & music, and it has everything to do with the way human hearing works and the way we perceive sounds, which always leads to the same conclusion : louder feels better - period -.

Any honest audio engineer will admit that if they need to impress clients, they will play back the final mix or master a bit louder, and it just works because of perceived loudness & the way our ears & brain process sound. Nothing is going to change this ( unless Elon Musk manages to re-program our brains with his Neuralink ) and in the end, this is still the pursuit & goal of all producers & mixing engineers : aiming for the maximum perceived loudness possible to make the track stand out from others. When digital audio came out and the max level was set to 0 dBFS, it was a matter of peaking around 0 dBFS without going above it, and metering was mainly based on RMS. This isn’t true anymore : we now have to deal with average & perceived loudness, and we have new metering units available such as LUFS.

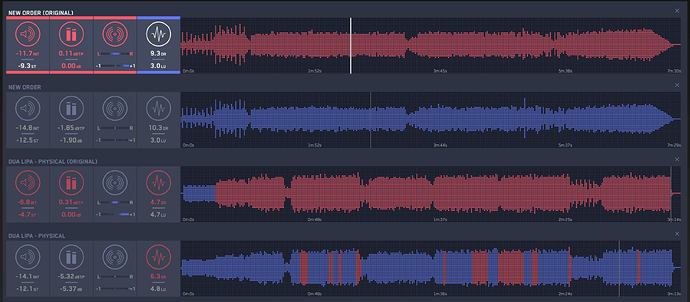

That’s maybe where your research & testing might not be that realistic, because while loudness & dynamic range are linked, they are 2 quite different things in the end. Dynamic range is closely linked to the type & genre of music : a classical piece will have a higher dynamic range than most electronic music, and some music really requires heavy compression to sound right & sit in the ballpark against similar tracks. It’s part of the sound we are used to, and our ears do quite a good job of referencing music we’ve heard before, so something too different will sound odd to us.

To perhaps be more accurate with your testing, you could perform a null test between your original final lossless track and the resulting lossy codec track from the Streaming platform : by inverting the polarity ( flipping the phase ) of one track and summing the two, you should be able to hear what the codec did to your original mix. iZotope Ozone also has tools to audition how your mix will sound once compressed to MP3 or AAC, which can be helpful too. Again, those lossy codecs don’t perform equally, but the principle is based around psychoacoustic compression, basically tricking our ears by removing inaudible content or content that the algorithm finds masked behind another sound. Those algorithms do a brilliant job, and most of the time the result is a very light & easily “stream-able” audio file that retains high audio quality and is often difficult to distinguish from CD quality. Still, I think it’s important to try to understand what those codecs are doing to your music and how to minimize artifacts & degradation, while keeping in mind that you can’t avoid this process anyway.
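To make the null test idea a bit more concrete, here is a minimal Python sketch of the arithmetic behind it. It is only an illustration under assumptions : the function name `null_test_residual_db` is made up, the “codec” is faked by simply dropping a high-frequency component from a synthetic signal, and both signals are assumed to be already time-aligned and level-matched ( a real lossy file usually carries some encoder delay, so with real tracks you would have to align them first ).

```python
import numpy as np

def null_test_residual_db(original, processed):
    """Level of (original - processed) relative to the original, in dB.

    Subtracting is the same as summing with one signal polarity-inverted.
    Assumes both signals are already time-aligned and level-matched.
    """
    n = min(len(original), len(processed))
    a = np.asarray(original[:n], dtype=np.float64)
    b = np.asarray(processed[:n], dtype=np.float64)
    residual = a - b
    rms_residual = np.sqrt(np.mean(residual ** 2))
    rms_original = np.sqrt(np.mean(a ** 2))
    if rms_residual == 0.0:
        return float("-inf")  # perfect null: nothing was changed
    return 20.0 * np.log10(rms_residual / rms_original)

# Synthetic one-second test signal: a 440 Hz tone plus a quiet 18 kHz tone.
sample_rate = 44100
t = np.arange(sample_rate) / sample_rate
original = 0.5 * np.sin(2 * np.pi * 440 * t) + 0.05 * np.sin(2 * np.pi * 18000 * t)

# Hypothetical stand-in for a lossy codec: the 18 kHz content is discarded.
processed = 0.5 * np.sin(2 * np.pi * 440 * t)

residual_db = null_test_residual_db(original, processed)
print(f"Residual level: {residual_db:.1f} dB relative to the original")
```

The lower ( more negative ) the residual, the less the processing changed the signal. Listening to the residual itself is usually more telling than the number.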

So while numbers can be used as points of reference, I’m not sure this is the right quest or the best way to analyze this. The next question is : does your music really need to be louder or competitive with others ? Well, that might be the case if you’re into Radio Pop Charts & Club bangers, but it’s definitely not something you need for music that is more refined & intimate, with subtle nuances & details that you wanted to share with the listener in the first place… and to me that’s the whole point here : “ don’t become comfortably numb ” to the numbers you’re reading on your meters.

I believe that it all starts right at the source with mixing, and whatever medium your music ends up on doesn’t really matter. Of course you’ll have to adapt your mix to the targeted medium, and yes, you have to care about loudness targets, but it’s more a matter of following some “delivery” rules in order to sit in the ballpark than of finding the magic numbers that will work each time, because each track might just be different.

The key point is to retain contrast while maintaining the best possible tonal balance in a mix IMO. Nothing sounds loud or quiet if everything is loud or quiet, right ? That’s how perceived loudness and human hearing work : we need to identify something as a level reference before being able to tell if something else is louder or quieter. Your best friend might be a classic VU meter, your worst enemy a limiter sitting on your master bus while you assume it will keep those peaks & harsh frequency pops & blips under control. The same goes for big dips : you have to take care of those to retain balance & energy. Since the final LUFS number reflects an average measurement over the entire duration of the track, keeping those peaks & dips under control as best we can is also very important. So in the end, I believe that we need to use both classic RMS metering & VU metering. The advantage of VU meters is that they have a slow response and are therefore closer to human hearing and perceived loudness. They show “energy” more than accurate peak levels, and that’s where we need classic RMS meters to get a more precise measurement of the harsh transients & peaks that VU meters fail to display.
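As a rough illustration of why a slow, averaging reading and a peak reading tell different stories, here is a small Python sketch. It is only a toy example on a synthetic signal, not a model of any real meter : the helper names are made up, and the “average” is a plain RMS over the whole signal rather than a proper VU ballistic or a LUFS measurement.

```python
import numpy as np

def peak_level_db(x):
    """Highest absolute sample value, in dB relative to full scale (1.0)."""
    return 20.0 * np.log10(np.max(np.abs(x)))

def average_level_db(x):
    """RMS over the whole signal, in dB relative to full scale (1.0)."""
    return 20.0 * np.log10(np.sqrt(np.mean(np.square(x))))

sample_rate = 44100
t = np.arange(sample_rate) / sample_rate

# A steady, fairly quiet 1 kHz tone.
steady = 0.1 * np.sin(2 * np.pi * 1000 * t)

# The same tone with a single full-scale click added (a harsh transient).
with_click = steady.copy()
with_click[1000] = 1.0

for name, signal in (("steady", steady), ("with click", with_click)):
    peak = peak_level_db(signal)
    avg = average_level_db(signal)
    # Crest factor: distance between the peak and the average level.
    print(f"{name:>10}: peak {peak:6.2f} dB, average {avg:6.2f} dB, "
          f"crest factor {peak - avg:5.2f} dB")
```

The click raises the peak reading by about 20 dB while the averaged reading hardly moves, which is the kind of thing a slow meter alone will not show you.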

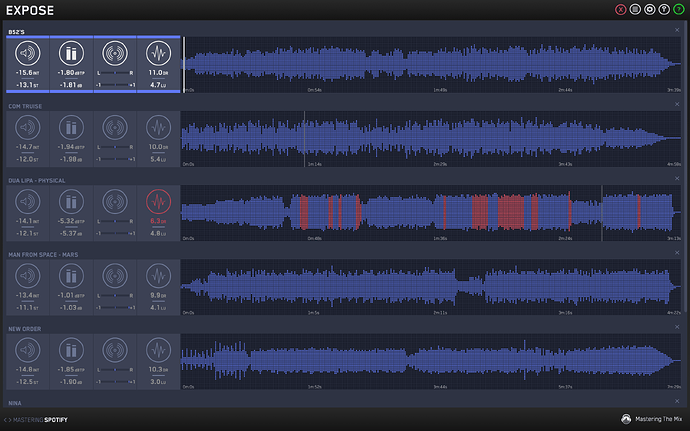

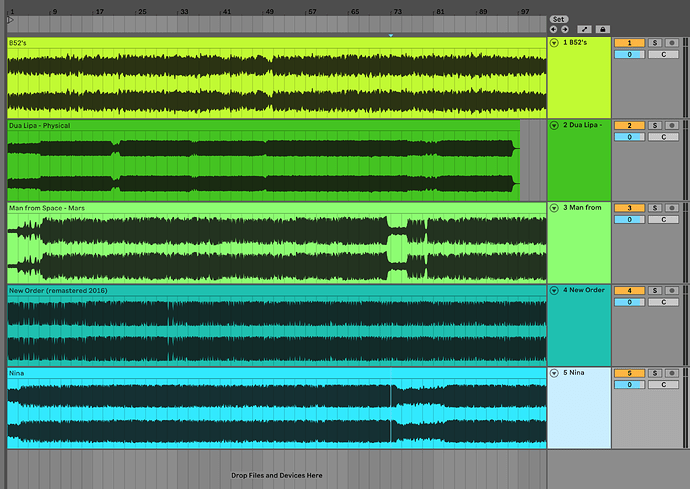

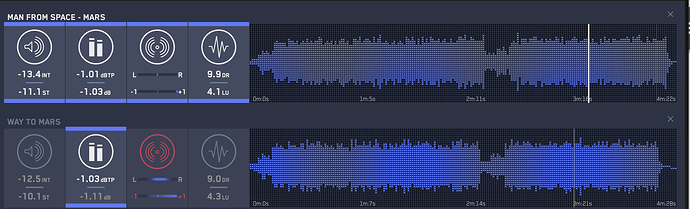

Those peaks & dips are going to be the main bit of information that dictates what those lossy codecs will do to your mix. Funnily enough, it could almost be compared to cutting music onto vinyl. Recently I was listening to Dom Kane in one of his Kane Audio AMA Vlog videos on YT ( which I highly recommend watching ) and he was telling a story about a label trying to cut one of his tracks onto vinyl. They kept asking him to tweak his final mix because each time they tried to cut the track, the needle was cutting too deep & would go through the plate. That’s the way contrast & dynamic range are achieved on vinyl, by cutting more or less deep. All his metering values were looking fine to him, and he lowered the final mix level, but still the needle would go through the plate ! Well, in the end it was some peaks in a certain frequency range causing this.

Those codecs are similar to this needle : if something is peaking too high they will squash it. So again, getting the best & most even balance for your final mix is really key IMO. And talking about Spotify, they also apply limiting at -1 dB alongside their codec processing. So the safest way is to aim at their -14 LUFS loudness target and from there bring your mix down by 1 dB. In reality, even if it’s quite a safe practice, it might not do justice to all your mixes, depending on the genre & how you’d like them to sound to the listener. Keeping a lot of Dynamic Range won’t harm your mix in terms of quality, but it won’t stand up & be competitive against commercial tracks ( but again, does the track need this ? ). If your mix is well balanced & if you have those peaks & dips under control, you could get better results with a final mix reading -12 LUFS and peaks not going above -1.0 dBFS, for example.

That’s where a good audio engineer can really shine at retaining energy & punch in a track, even if it’s quite heavily compressed and has less Dynamic Range, and at the same time be able to “trick” those codecs and get a final streaming audio file with very competitive perceived loudness. It’s based around EQing, taming & boosting crucial frequency ranges and channel volume automation, retaining contrast while keeping a fairly consistent energy through the whole duration of the track.
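To put some arithmetic on the -14 LUFS target and the -1 dB ceiling mentioned above, here is a small Python sketch. It only illustrates the general idea of loudness normalization under assumptions : the function names are made up, the default values are simply the figures quoted in this post, and the rule of capping positive gain at the ceiling is my own simplification, not a description of any platform’s actual processing.

```python
def normalization_gain_db(measured_lufs, target_lufs=-14.0):
    """Gain (in dB) needed to move a track from its measured loudness to the target."""
    return target_lufs - measured_lufs

def applied_gain_db(measured_lufs, peak_db, target_lufs=-14.0, ceiling_db=-1.0):
    """Gain after capping positive gain so the peak stays at or below the ceiling.

    Assumption for illustration: loud tracks are simply turned down, while
    quiet tracks are only turned up as far as the peak headroom allows.
    """
    gain = normalization_gain_db(measured_lufs, target_lufs)
    if gain > 0.0:
        headroom = max(0.0, ceiling_db - peak_db)
        gain = min(gain, headroom)
    return gain

# A loud master at -12 LUFS with peaks at -1 dB is turned down by 2 dB,
# so its peaks end up at -3 dB.
loud_gain = applied_gain_db(measured_lufs=-12.0, peak_db=-1.0)
print(loud_gain, -1.0 + loud_gain)

# A quiet, dynamic master at -18 LUFS with peaks already at -1 dB would need
# +4 dB to reach the target, but there is no headroom left under the ceiling.
quiet_gain = applied_gain_db(measured_lufs=-18.0, peak_db=-1.0)
print(quiet_gain)
```

Seen this way, a louder master mostly just gets turned down, while a quiet but peaky one may not reach the target at all, which is one more reason to keep those peaks under control rather than chase a single number.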

But OK, enough of my own rant on this for now. I believe that if anyone should know something about preparing mixes for Streaming Services, it would probably be mixing & mastering engineers, no ? So here are some videos that I found interesting on the subject.

The Future of Mastering: Loudness in the Age of Music Streaming

Mastering for Spotify® and Other Streaming Services | Are You Listening? | S2 Ep4

Loudness in Mastering | Are You Listening? | S2 Ep5

Loudness on Streaming - Into The Lair #167